Monitoring Search Operations

Problem - A Flood of Searches

A client sees 1,000,000 queries in their usage panel, but they only have 100 users. There’s a good chance that something is wrong.

They initially suspect spam or malicious users. They also consider bugs in their solution. They wonder if there is a runaway loop triggering millions of search requests. Maybe they’re sending too many empty requests, triggered by automatic refreshes. They contact support to help them debug.

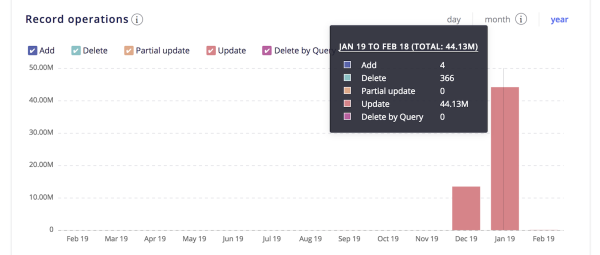

Investigation is needed. They start with the Dashboard’s Monitoring page.

With this view, they confirm that there is indeed a spike. But it doesn’t tell them why.

Cause - Google Bots

While there are many reasons for an increase in search operations, the one that concerns us here is caused by Google bots. When Google crawls your website, it has the potential to trigger events that execute queries. For some sites, this might not be a problem, causing only a few unintended searches. But for other sites, it can cause a flood of query requests.

One common reason for this is refinements or facets. Many clients create separate URLs for all of their facet values. Thus, when Google crawls the website, it requests a separate URL for every refinement, and each of those page loads can execute a search query. See the example below for more on this use case.
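To get a feel for how quickly facet URLs multiply, here is a minimal sketch. It assumes each facet contributes at most one selected value (or none) to the URL and that parameter order is fixed; the function name and the facet counts are hypothetical, not taken from any real site.

```python
from math import prod


def crawlable_url_count(values_per_facet):
    """Count distinct facet URLs, assuming each facet is either unset
    or set to exactly one of its values (hence the +1 per facet)."""
    return prod(n + 1 for n in values_per_facet)


# Three hypothetical facets with 5, 10, and 4 values each.
print(crawlable_url_count([5, 10, 4]))

# Thirty hypothetical on/off filters (one value each).
print(crawlable_url_count([1] * 30))
```

Even a handful of facets produces hundreds of distinct URLs, and a crawler that follows every link can request all of them.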

Investigation

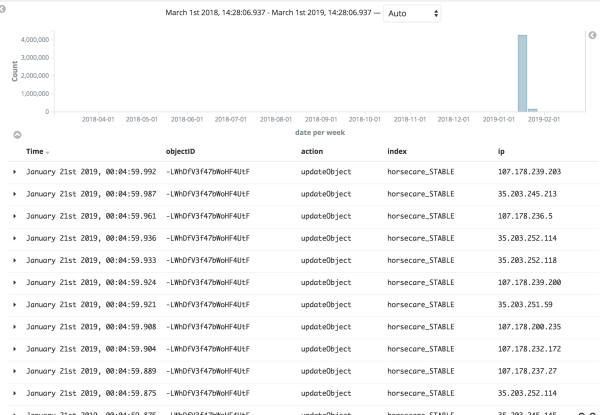

Get a list of IPs

To validate that a bot is triggering the unexpected operations, go to the “Indices” > “Search API Logs” section of your Algolia dashboard. From there, you can dig into every search request and get the associated IP, or see whether one IP keeps sending the same request. If most requests come from a search bot such as Googlebot (the bot Google uses to crawl the internet and build its search index), a crawler is the likely cause.

Note: You can do the same with the get-logs API method.
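Once you have log entries, whether exported from the dashboard or fetched with the get-logs method, a quick tally per IP shows whether a few addresses dominate. The sketch below assumes each entry is a dictionary with an `ip` field; check that field name against your actual log output. The sample entries are made up for illustration.

```python
from collections import Counter


def top_ips(log_entries, limit=5):
    """Return the `limit` most frequent client IPs as (ip, count) pairs."""
    counts = Counter(entry["ip"] for entry in log_entries if entry.get("ip"))
    return counts.most_common(limit)


# Hypothetical log entries; real entries carry more fields.
sample_logs = [
    {"ip": "66.249.66.1"},
    {"ip": "66.249.66.1"},
    {"ip": "66.249.66.1"},
    {"ip": "203.0.113.7"},
]

for ip, count in top_ips(sample_logs):
    print(ip, count)
```

An IP that accounts for a large share of requests is the first one to look up in the next step.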

Identify the source of the IPs

Check the IP using a lookup service such as GeoIP. If the lookup traces the IP back to Google, that confirms Googlebot is behind the increase. Here’s a tool to help you trace IP addresses back to their source.
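As an alternative to a lookup website, you can check an IP yourself with a reverse DNS lookup followed by a forward lookup, which is the general approach Google describes for verifying its crawlers. This is a sketch, not a complete verifier: the function names are hypothetical, the accepted domain suffixes are an assumption you should confirm against Google's current documentation, and the forward lookup used here only handles IPv4.

```python
import socket

# Assumed hostname suffixes for Google crawlers; verify against Google's docs.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_google_hostname(hostname):
    """True if the hostname belongs to one of the assumed Google domains."""
    return hostname.rstrip(".").lower().endswith(GOOGLE_CRAWLER_DOMAINS)


def is_verified_googlebot(ip,
                          reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Reverse-resolve the IP, check the domain, then confirm the
    hostname resolves back to the same IP."""
    try:
        hostname = reverse_lookup(ip)[0]
        if not is_google_hostname(hostname):
            return False
        return ip in forward_lookup(hostname)[2]
    except OSError:  # covers failed DNS lookups
        return False
```

Calling `is_verified_googlebot("<ip from your logs>")` performs real DNS queries. The lookup functions are parameters so that the logic can be exercised without network access.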

Solution - Exclude URLs from Google Bots

Initial Approach

- Your web host or infrastructure provider is a good point of contact for mitigating bot searches on your website.

- If you use a CMS such as Magento or WordPress, the platform's marketplace has offerings focused on bots.

Inform Google

- Tell Googlebot not to crawl your search pages at all with a well-configured robots.txt. You can refer to this guide by Google. Some websites let Google crawl their main search page but don't allow it to go any further (for example, crawling every results page).

A Google Trick

- Implement the latest Google reCAPTCHA, which is a very efficient way to protect yourself against bots.

Cloudflare

- Cloudflare has good measures against bot abuse as well.

Putting it all together - A real-life example

You see 1M queries but you only have 10 users!

- You use Cloudflare to identify the source of the traffic: it is clearly Googlebot.

- On your page, every sort and refinement is a crawlable link. With 30 different filters, the number of crawlable URLs grows exponentially (up to 2^30, over a billion, if each filter can be switched on or off independently). Google crawled the site for three days straight, resulting in 150k+ query operations.

- You fix it by adding the appropriate Disallow rules to your robots.txt file, which implements the Robots Exclusion Protocol. The general approach is to disallow each refinement and sort.

- For example, you go to your Algolia search page and filter results by clicking on refinements and sorts. This produces a URL such as https://mywebsite.com/?age_group_map=7076&color_map=5924&manufacturer=2838&price=150-234&size=3055&product_list_order=new.

The resulting robots.txt file could be:

    User-agent: *                     # the * means this section applies to all robots
    # You need a separate Disallow line for every URL prefix you want to exclude
    Disallow: /?*age_group_map        # do not crawl URLs with the age_group_map query parameter
    Disallow: /?*color_map            # do not crawl URLs with the color_map query parameter
    Disallow: /?*manufacturer         # do not crawl URLs with the manufacturer query parameter
    Disallow: /?*price                # do not crawl URLs with the price query parameter
    Disallow: /?*product_list_order   # do not crawl URLs with this sort query parameter

For InstantSearch, you can do the same with this:

    Disallow: /?*refinementList
    Disallow: /?*sortBy
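Before deploying rules like these, it helps to check them against a few real URLs. Python's built-in `urllib.robotparser` does plain prefix matching and does not interpret the `*` wildcard, so the sketch below implements a simplified matcher instead: `*` matches any sequence of characters and a trailing `$` anchors the end. It ignores `Allow` rules and rule precedence, and the function names are hypothetical.

```python
import re
from urllib.parse import urlsplit

# Disallow patterns taken from the facet example in this article.
DISALLOW_PATTERNS = [
    "/?*age_group_map",
    "/?*color_map",
    "/?*manufacturer",
    "/?*price",
    "/?*product_list_order",
]


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(url, patterns=DISALLOW_PATTERNS):
    """True if the URL's path and query match any Disallow pattern."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    return any(pattern_to_regex(p).match(target) for p in patterns)


faceted = ("https://mywebsite.com/?age_group_map=7076&color_map=5924"
           "&manufacturer=2838&price=150-234&size=3055&product_list_order=new")
print(is_disallowed(faceted))
print(is_disallowed("https://mywebsite.com/"))
```

The faceted URL is reported as disallowed while the bare home page is not. Note that these patterns start with `/?`, so they only cover query strings on the root path; a search page at another path would need its own rules.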

Other Solutions

Rate Limiting using Algolia API Keys

In Algolia, you can generate a new Search API key that limits the number of queries per IP, per hour. But because bot IPs vary greatly, trying to block every bot this way would ultimately degrade search for your normal users. If you would like to retrieve the most frequent visitor IP addresses, you can find them in your logs.

The limit you choose is up to you. We generally recommend starting with a higher number (to avoid limiting real users) and reducing it gradually based on the usage and bot traffic you observe. See rate limiting for more information.
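As a rough illustration, the sketch below builds (but does not send) a request that creates a search-only key with a per-IP hourly limit. It assumes the REST endpoint `POST /1/keys`, the `maxQueriesPerIPPerHour` parameter, and the `X-Algolia-*` authentication headers; confirm all of these against the current Algolia API reference before use. The function name, placeholder credentials, and the limit of 5000 are hypothetical.

```python
import json
import urllib.request


def build_rate_limited_key_request(app_id, admin_api_key, max_queries_per_ip_per_hour):
    """Build a request that creates a search-only API key with a per-IP limit."""
    body = {
        "acl": ["search"],
        "maxQueriesPerIPPerHour": max_queries_per_ip_per_hour,
        "description": "Rate-limited search key",
    }
    return urllib.request.Request(
        url=f"https://{app_id}.algolia.net/1/keys",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "X-Algolia-Application-Id": app_id,
            "X-Algolia-API-Key": admin_api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Start high, then lower the limit as you learn what real users need.
request = build_rate_limited_key_request("YOUR_APP_ID", "YOUR_ADMIN_API_KEY", 5000)
print(request.get_method(), request.full_url)
# To actually create the key: urllib.request.urlopen(request)
```

Keeping the limit as a parameter makes it easy to issue a new key with a lower value later without touching the rest of the code.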