What Is A/B Testing


We have leveraged two signature features - Relevance Tuning and Analytics - to create a new tool: A/B Testing.

Let’s see what this means:

- Relevance tuning lets you give your users the best search results.

- Analytics makes relevance tuning data-driven, ensuring that your configuration choices are sound and effective.

Relevance tuning, however, can be tricky. The choices are not always obvious. It is sometimes hard to know which settings to focus on and what values to set them to. It is also hard to know if what you’ve done is useful or not. What you need is input from your users, to test your changes live.

This is what A/B Testing does. It lets you create two alternative search experiences with unique settings, put them both live, and see which one performs best.

Advantages of A/B Testing

With A/B Testing, you run alternative indices or searches in parallel, capturing Click and Conversion Analytics to compare effectiveness.

You make small incremental changes to your main index or search and have those changes tested - live and transparently by your customers - before making them official.

A/B Testing goes directly to an essential source of information - your users - by including them in the decision-making process, in the most reliable and least burdensome way.

These tests are widely used in the industry to measure the usability and effectiveness of a website. Algolia’s focus is on measuring search and relevance: are your users getting the best search results? Is your search effective in engaging and retaining your customers? Is it leading to more clicks, more sales, more activity for your business?

Implementing A/B Testing

Algolia A/B Testing was designed with simplicity in mind. This user-friendliness enables you to perform tests regularly. Assuming you’ve set up Click and Conversion Analytics, A/B Testing doesn’t require any coding intervention. It can be managed from start to finish by people with no technical background.

Collect clicks and conversions

To perform A/B testing, you need to set up your Click and Conversion Analytics: this is the only way of testing how each of your variants is performing.

While A/B testing itself does not require coding, sending clicks and conversions requires coding.
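As a rough illustration of the coding involved, the sketch below builds the JSON body for one click and one conversion event. It assumes the event shape of Algolia's Insights REST API (`eventType`, `eventName`, `index`, `userToken`, `queryID`, `objectIDs`, `positions`) and its `/1/events` endpoint. The index name, user token, query ID, and object IDs are hypothetical, and the field names should be checked against the current Insights reference before use. Nothing is sent over the network here.

```python
import json

# Assumed Insights endpoint; confirm against the current API reference.
INSIGHTS_URL = "https://insights.algolia.io/1/events"


def build_event(event_type, event_name, index, user_token, query_id,
                object_ids, positions=None):
    """Build one click or conversion event tied to a search by its query ID."""
    if event_type not in ("click", "conversion"):
        raise ValueError("event_type must be 'click' or 'conversion'")
    event = {
        "eventType": event_type,
        "eventName": event_name,
        "index": index,
        "userToken": user_token,
        "queryID": query_id,
        "objectIDs": list(object_ids),
    }
    if event_type == "click":
        # A click after a search carries the position of each clicked result.
        if positions is None or len(positions) != len(object_ids):
            raise ValueError("click events need one position per object ID")
        event["positions"] = list(positions)
    return event


def build_request_body(events):
    """Serialize a batch of events into the JSON body to POST to INSIGHTS_URL."""
    return json.dumps({"events": list(events)})


# Hypothetical values, for illustration only.
click = build_event("click", "Result Clicked", "catalog", "user-42",
                    "query-id-from-search-response", ["item-1"], [1])
conversion = build_event("conversion", "Item Purchased", "catalog", "user-42",
                         "query-id-from-search-response", ["item-1"])
body = build_request_body([click, conversion])
```

The body would then be posted with your application ID and API key in the request headers, using whichever HTTP client or official API client you prefer.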

Set up the index or query

We allow two kinds of A/B Tests:

- Test different index settings (applies to any parameter that can only be used in the settings scope). This requires creating replicas, which increases your record count.

- Test different search parameters (no index setup required).

Run the A/B test

After creating or selecting your indices, you can start your A/B tests in two steps.

- Use the A/B test tab of the Dashboard to create your test,

- Start the A/B test.

After letting your test run and collect analytics, you can review, interpret, and act on the results. You can then create new A/B tests, iteratively optimizing your search!

Accessing A/B testing through the Dashboard

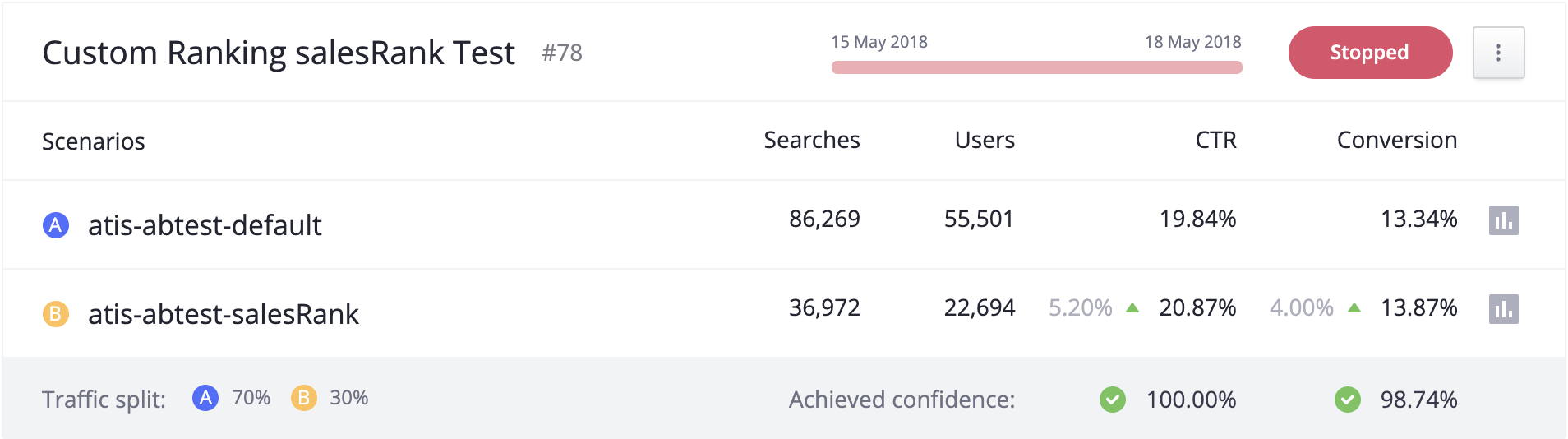

To access A/B testing analytics and create A/B tests, you should go through the A/B testing tab of the Dashboard. The A/B testing section provides very basic analytics, so you may want to get more information through the Analytics tab. However, because all search requests to an A/B test first target the primary (‘A’) index, viewing a test directly on the Analytics tab will include searches to both the A and B variants.

To let you view detailed analytics for each variant independently, we automatically add tags to A/B test indexes. To access the variant’s analytics, click on the small analytics icon on the right of each index description in the A/B test tab: it appears as a small bar graph in the figure above. The icon will automatically redirect you to the Analytics tab with the appropriate settings (time range and analyticsTags) applied.

Example A/B tests

As previously mentioned, we allow two kinds of A/B Tests:

- comparing different index settings,

- comparing different search settings.

For index-based testing, you can test:

- your index settings,

- your data format.

For search-based settings, you can test any search-time setting, including:

- typo tolerance,

- Rule enablement,

- optional filters,

- etc.

Example 1, Changing your index settings

Add a new custom ranking with the number_of_likes attribute

You’ve recently offered your users the ability to like your items, which include music, films, and blog posts. You’ve gathered “likes” data, and you’d like to use this information to sort your search results. However, before implementing such a big change, you want to make sure it improves your search. You decide to do this with A/B testing.

First you create your A/B test indices:

- You add a number_of_likes attribute to your main catalog index (this is index A in your A/B test), and then create index B as a replica of A. You adjust index B’s settings by sorting its records based on number_of_likes.
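The index setup for this example can be sketched as two settings objects, one per index. The index names are hypothetical. `replicas` and `customRanking` are the setting names this sketch assumes; how you apply them (Dashboard or API client) is up to you.

```python
# Hypothetical index names, chosen for illustration.
PRIMARY_INDEX = "catalog"            # index A
REPLICA_INDEX = "catalog_by_likes"   # index B


def custom_ranking(attribute, order="desc"):
    """Format one custom ranking criterion, for example 'desc(number_of_likes)'."""
    if order not in ("asc", "desc"):
        raise ValueError("order must be 'asc' or 'desc'")
    return f"{order}({attribute})"


# Settings for index A: declare B as a replica so it receives the same records.
primary_settings = {"replicas": [REPLICA_INDEX]}

# Settings for index B: rank records by number of likes, most liked first.
replica_settings = {"customRanking": [custom_ranking("number_of_likes")]}
```

Because B is a replica, it holds a copy of A's records, which is why this kind of test increases your record count.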

Now, you go to the A/B testing tab of the Dashboard and create an A/B test.

- You name your test “Test new ranking with number_of_likes”.

- You want this test to run for 30 days, to be sure to get enough data and a good variety of searches, so you set the date parameters accordingly.

- You set B at only 10% usage, because of the uncertainty of introducing a new sorting: you don’t want to change the user experience for too many users until you’re absolutely sure the change is desirable.

- When your test reaches 95% confidence or greater, you can see whether your change improves your search, and whether the improvement is large enough to justify the cost of implementation.
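To give a feel for what a 95% confidence threshold means, here is a minimal sketch using a two-proportion z-test on click-through rates. This is a common textbook method, used here as an assumption for illustration. It is not necessarily the calculation the Dashboard performs, and the counts in the usage lines are made up.

```python
import math


def two_proportion_confidence(clicks_a, searches_a, clicks_b, searches_b):
    """Return 1 minus the two-sided p-value for the difference in click rates."""
    rate_a = clicks_a / searches_a
    rate_b = clicks_b / searches_b
    # Pooled rate under the hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (searches_a + searches_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / searches_a + 1 / searches_b))
    if std_err == 0:
        return 0.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return 1 - p_value


# Made-up counts for a 90/10 split: B sees far fewer searches than A.
early = two_proportion_confidence(100, 1000, 11, 100)
later = two_proportion_confidence(1000, 10000, 150, 1000)
print(f"early: {early:.3f}, later: {later:.3f}")
```

With a 90/10 split, variant B accumulates data slowly, so a small test group generally needs a longer test duration before the difference becomes statistically meaningful.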

Example 2, Reformatting your data

Add a new search attribute: short_description

Your company has added a new short description to each of your records. You want to see if setting short description as a searchable attribute improves your relevance.

First, you configure your test index (you only need one index for this test):

- Add the new attribute short_description to your main catalog index (this is A for the test). Because you are testing a search time setting for your index, you can use A as both indices in your test.

Now, you go to the A/B testing tab of the Dashboard and create an A/B test.

- You create a test called “Testing the new short description”.

- Create your A/B test with index A as both variants and set short_description as the varying searchable attribute.

- You have enough traffic to know that 7 days of testing provides enough data to form a reasonable conclusion, so you set the test length to 7 days.

- For the same reason as example 1, you give B only 30% usage (70/30) - because of the uncertainty: you’d rather not risk degrading an already good search with an untested attribute.

- Once you reach 95% confidence, you can judge the improvement and the cost of implementation to see whether this change is beneficial.

Example 3, enabling/disabling Rules: compare a query with and without merchandising

You can use A/B testing to check the effectiveness of your Rules. This example tests search with Rules enabled against search with Rules disabled.

Your company has just received the new iphone. You want this item to appear at the top of the list for all searches that contain “apple” or “iphone” or “mobile”. You plan to use an A/B test to see whether putting the new iphone at the top of your results encourages traffic and sales.

First, create Rules for your main catalog index (this is A in the test) that promote your new iphone record.

Next, configure your test index (you only need one index for this test).

- Make sure that Rules are enabled for index A.

Lastly, go to the A/B testing tab of the Dashboard.

- Create a test called “Testing newly-released iphone merchandising”.

- Create your A/B test with index A as both variants. As the varying query parameter, add enableRules.

- You have enough traffic to know that 7 days is sufficient.

- For the same reason as example 1, you give B only 30% usage (70/30) - because of the uncertainty: you’d rather not risk degrading an already good search with an untested change.

- Once you reach 95% confidence, you can judge the improvement and the cost of implementation to see whether this change is beneficial.
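The Dashboard steps above have a programmatic counterpart. As a sketch only, the function below builds a test definition for this example in the shape this page assumes for Algolia's A/B testing REST API: `name`, `variants` with `index`, `trafficPercentage`, and `customSearchParameters`, plus `endAt`. Treat these field names as assumptions to verify against the current API reference. The index name is hypothetical.

```python
import json
from datetime import datetime, timedelta, timezone


def build_ab_test(name, index, traffic_b, days, custom_search_parameters, now=None):
    """Build a definition where both variants use one index and B varies a search parameter."""
    if not 0 < traffic_b < 100:
        raise ValueError("traffic_b must be between 1 and 99")
    now = now or datetime.now(timezone.utc)
    end_at = (now + timedelta(days=days)).strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "name": name,
        "variants": [
            # Variant A: the index as it is, with Rules enabled.
            {"index": index, "trafficPercentage": 100 - traffic_b},
            # Variant B: same index, with the varying search parameter applied.
            {
                "index": index,
                "trafficPercentage": traffic_b,
                "customSearchParameters": custom_search_parameters,
            },
        ],
        "endAt": end_at,
    }


# 70/30 split for 7 days; B searches the same index with Rules turned off.
test_definition = build_ab_test(
    "Testing newly-released iphone merchandising",
    "catalog",  # hypothetical index name
    30,
    7,
    {"enableRules": False},
    now=datetime(2024, 1, 1, tzinfo=timezone.utc),
)
print(json.dumps(test_definition, indent=2))
```

Using one index for both variants avoids creating a replica, so this kind of test does not add to your record count.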